Grand Prize – Artistic Exploration

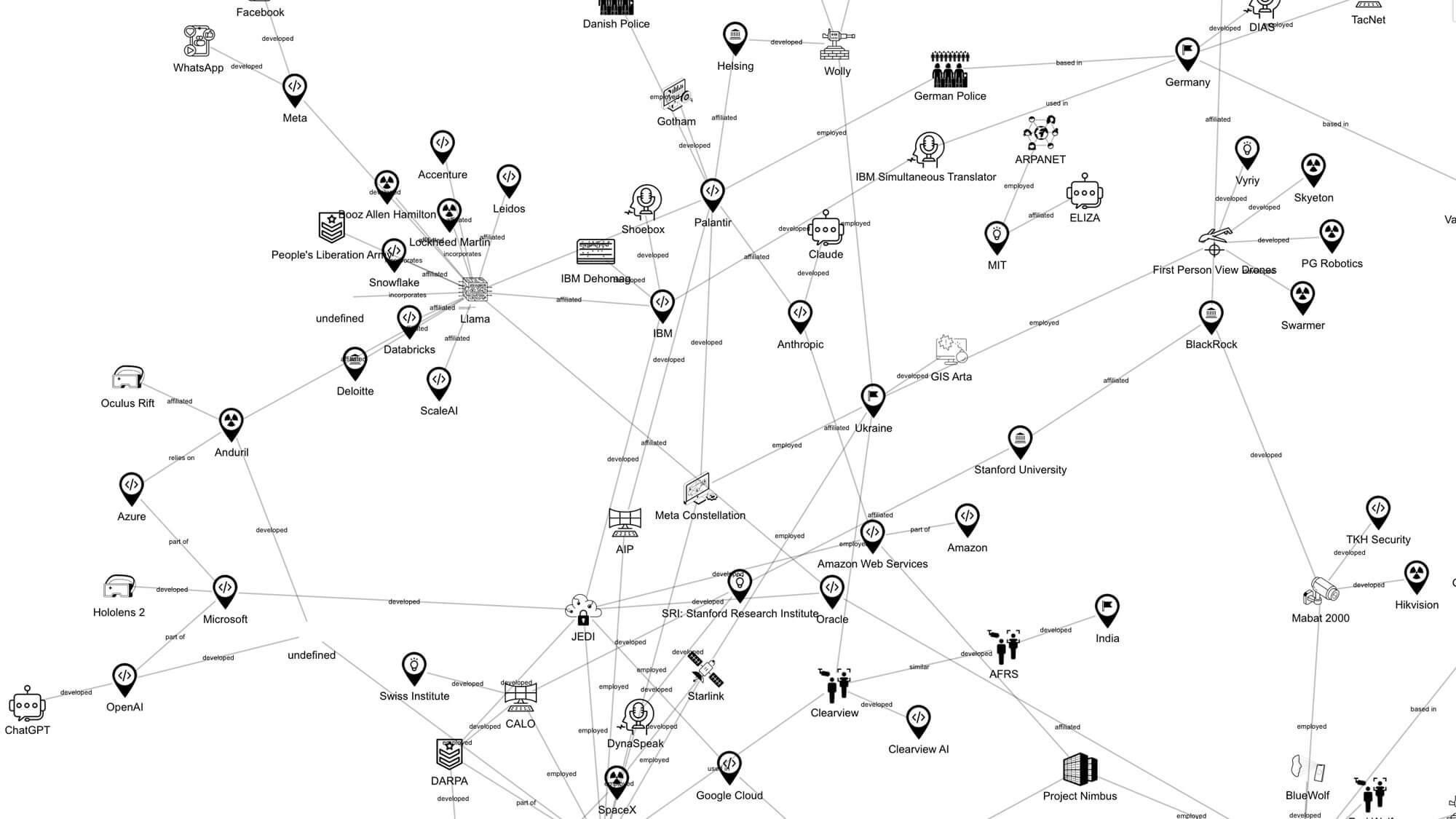

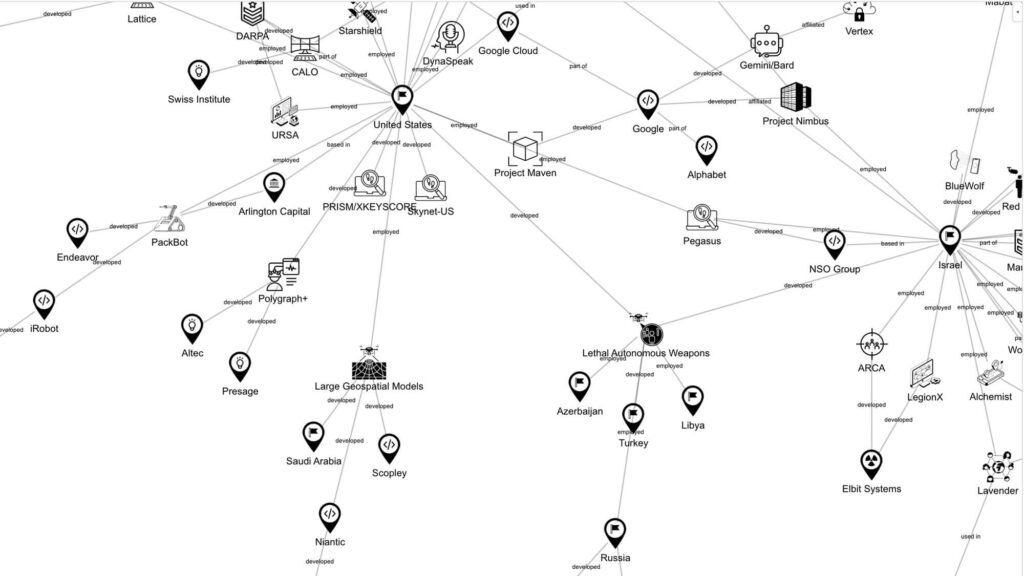

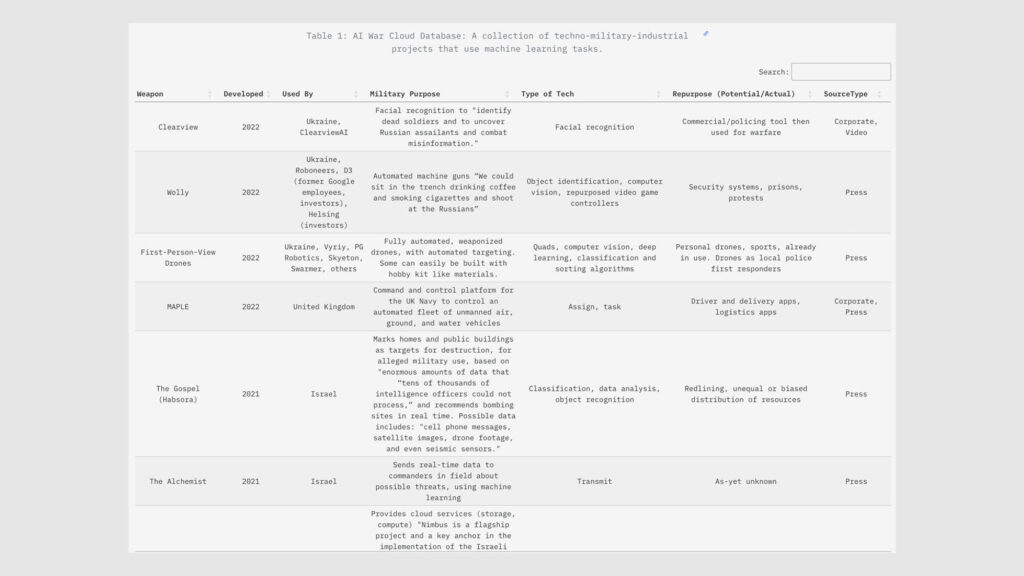

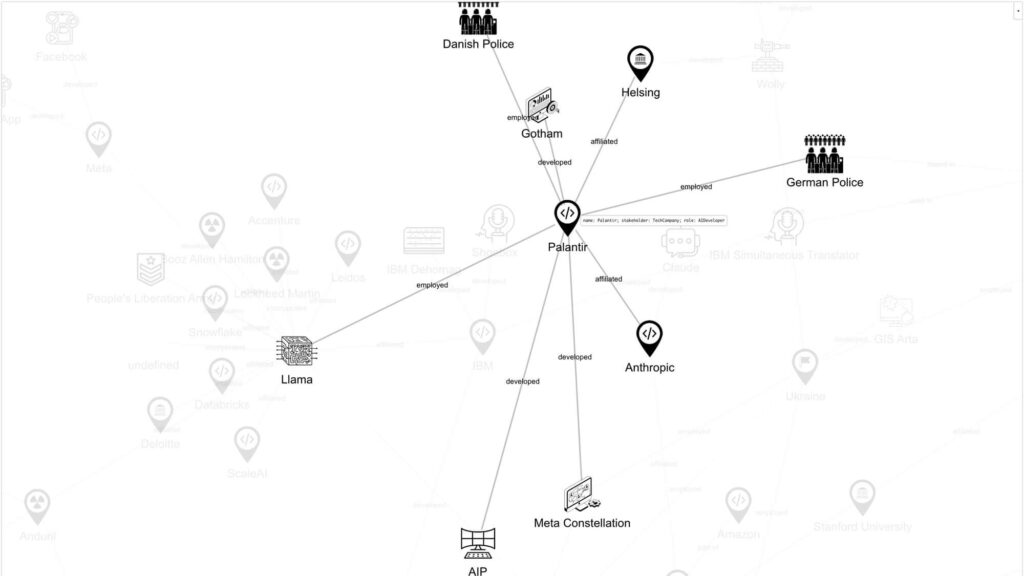

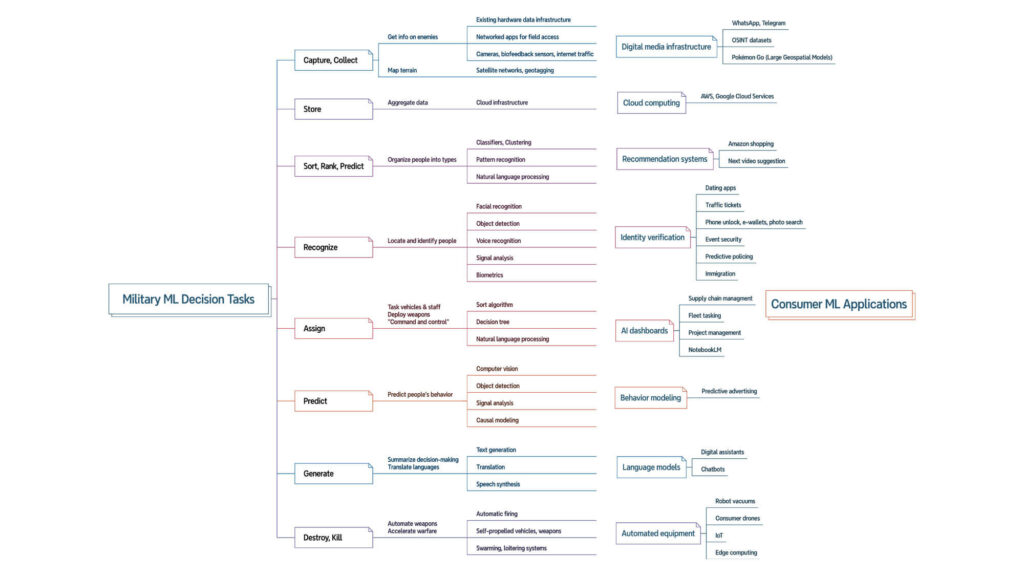

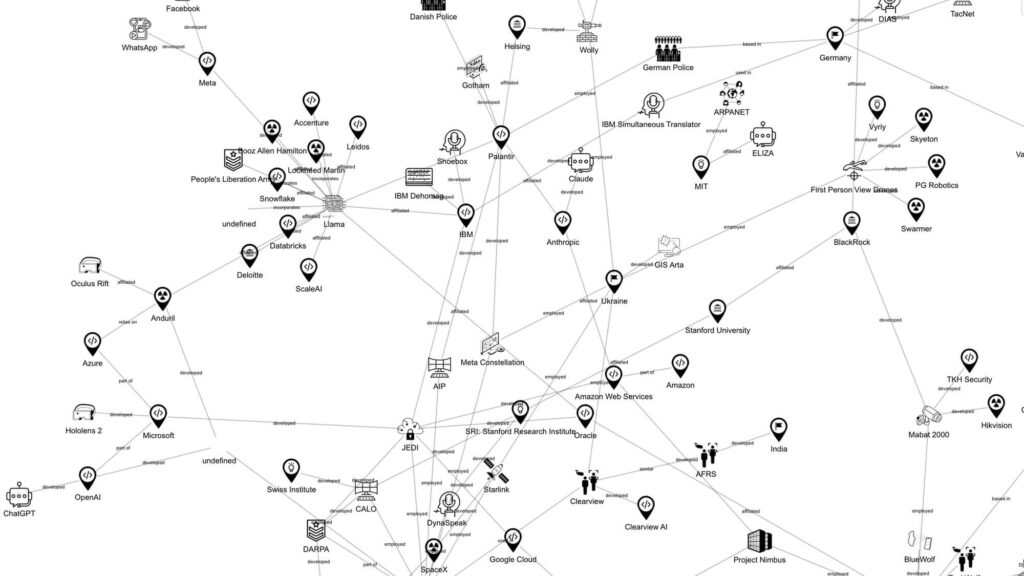

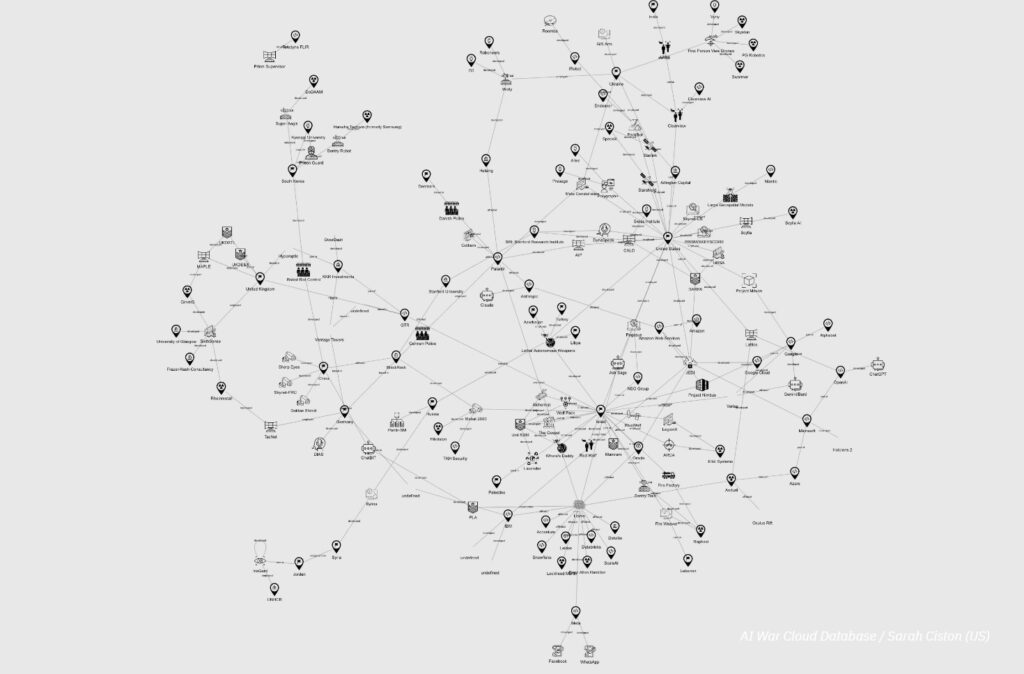

What responsibilities do users and makers have in choosing AI tools, when their development can also lead to deadly outcomes at massive scales? AI War Cloud Database catalogues the systems used to make automated decisions in warfare, and it maps where the same types of tools appear in smartphones and popular social platforms.

The iris scanner tested on refugees becomes technology for a crypto wallet. The robot vacuum mapping homes becomes a bomb robot abroad. The chatbot trained by low-wage workers determines the results of your next internet search and also the next drone target or the force of the next bomb.

AI War Cloud Database makes these latent connections plain. It builds a taxonomy of AI decision-making systems, shows how they are used in both military and commercial contexts, and tracks which nations and corporations are collaborating to deploy them. It offers an interactive interface for interrogating both automated warfare and the seemingly innocuous devices we use every day.

“Software-defined warfare” is upscaling violence and normalizing its logics: the US Army claims it has shortened the time it takes to select and execute a target from 20 minutes to 20 seconds.[1] By understanding how warfare uses machine learning, AI War Cloud deconstructs the idea of ‘AI decision support’, showing it to be a misdirect from the larger problems common to all AI systems, which amplify, accelerate, and neutral-wash biased human processes. It questions the faulty reliance on ‘humans in the loop’ when decisions have been algorithmically constructed.

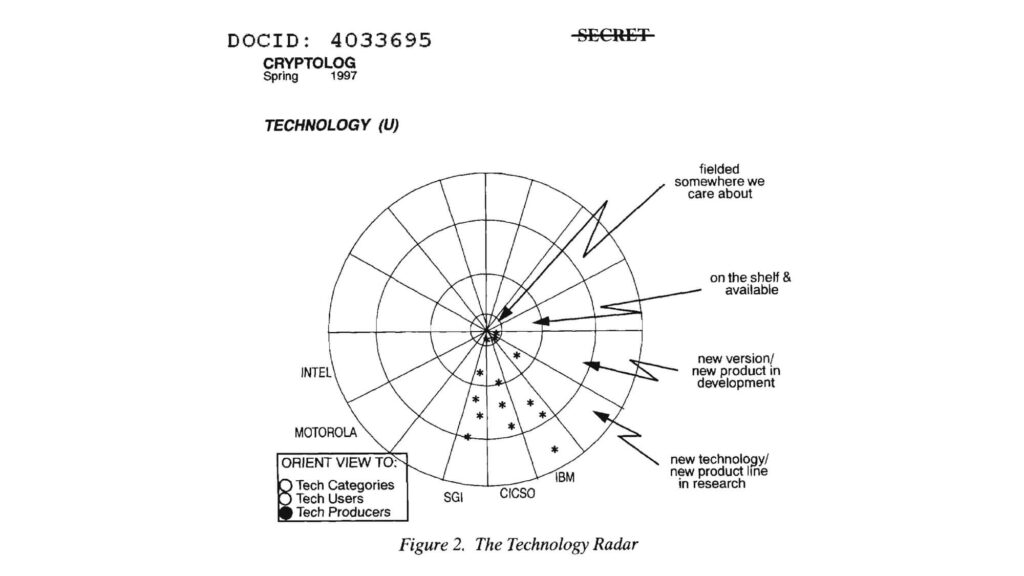

AI War Cloud also provides a framework to question the extrajudicial shifts in power that are occurring as tech giants and tiny startups become defense contractors, and as their products become arbiters of acceptable casualties. Its database includes more than 50 examples spanning the last 25 years. Although continually updated, these remain a small sample of a rapidly growing field. They are contextualized by a selection of commercial and historical examples that help show how connections have developed over time, across borders, and across domains, complicating attempts at regulation and critical appraisal.

These systems are “neither new, nor newly urgent,” as Sarah T. Hamid says. “[…T]hey exemplify more of what Ruth Wilson Gilmore articulates as the ‘changing same.’”[2] Inspired in part by the histories of tech and power mapped by Kate Crawford and Vladan Joler’s Calculating Empires, and building on important research by groups like AirWars, Tech Inquiry, No Tech for Apartheid, and the Forum of Computer Professionals for Peace and Societal Responsibility, the database gathers many kinds of publicly available materials: journalism, scholarly articles, product descriptions from military and corporate websites, patents, technical papers, government tenders, and (where possible) source code and software documentation. The project is open-source and in process, in order to adapt as technologies and contexts change, and to welcome interdisciplinary contribution. As such, it provides a scaffold for further research, conversation, and provocation.

AI War Cloud is neither a comprehensive index nor a solution. Unfathomable loss is impossible to reconcile, as it scales alongside the technological accelerations which enable that loss. The piece began as a personal strategy to witness and cope with the dailiness of AI-enabled tragedies worldwide. It is meant as a plea, encouraging intervention into the automated tools and infrastructures that support warfare.

Early computer scientist Joseph Weizenbaum asked that we fight the psychological distance created by the electronic battlefield, saying, “All of us must therefore consider whether our daily work contributes to the insanity of further armament or to genuine possibilities for peace.”[3] AI War Cloud Database hopes to support the collective work needed to reckon with automated systems both on the battlefield and on our own devices.

Tracing the Invisible Lines Between Devices and Drones

How closely are commercial AI systems entangled with military technology? Awarded the STARTS Prize 2025 Grand Prize – Artistic Exploration, this project reveals hidden connections.

Footnotes

[1] Arthur Holland Michel, “Inside the Messy Ethics of Making War with Machines,” MIT Technology Review, August 16, 2023.

[2] Sarah T. Hamid, “History as Capture,” BJHS Themes 8 (January 2023): 51–64.

Ruth Wilson Gilmore, Golden Gulag: Prisons, Surplus, Crisis, and Opposition in Globalizing California (University of California Press, 2007).

[3] Joseph Weizenbaum, “Not without Us,” SIGCAS Comput. Soc. 16, no. 2–3 (August 1, 1986): 2–7.

—, “On the Impact of the Computer on Society,” Science 176, no. 4035 (1972): 609–14.

Credits

Design, programming, research, writing: Sarah Ciston

Research sources and image credits on project website.

With thanks to Claire Carroll, Kate Crawford, Ariana Dongus, Vladan Joler, Pedro Oliveira, Miller Puckette, Corbinian Ruckerbauer, Nataša Vukajlovic, Ben Wagner and the AI Futures Lab, Thorsten Wetzling, Cambridge Digital Humanities, and the Center for Advanced Internet Studies for discussions and support contributing to the work.

Biography

Sarah Ciston (US) builds critical-creative tools to bring intersectional approaches to machine learning. They are co-author of “A Critical Field Guide for Working with Machine Learning Datasets” and co-author of Inventing ELIZA: How the First Chatbot Shaped the Future of AI (MIT Press 2026). Ciston has been named an AI Newcomer by the German Society for Computing, a Google Season of Docs Fellow for p5.js/Processing Foundation, and an AI Fellow at Akademie der Künste Berlin. Currently a Research Fellow at the Center for Advanced Internet Studies, they hold a PhD in Media Arts + Practice from University of Southern California and are the founder of Code Collective: an approachable, interdisciplinary community for co-learning programming.

Jury Statement

As Artificial Intelligence becomes central to both everyday life and modern warfare, AI War Cloud Database by Sarah Ciston offers a vital and timely investigation into how these systems operate, and the consequences they carry. The work reveals how the same machine learning tools that power recommendation engines, chatbots, and predictive algorithms are also used in military decision-making, raising urgent questions about accountability, transparency, and responsibility.

Focusing on real-world systems like Palantir’s MetaConstellation and Israel’s Lavender, AI War Cloud Database shows how vast amounts of data are processed to make life-and-death decisions at speed. It highlights how these technologies are often tested on vulnerable populations in conflict zones, before being deployed domestically on the very citizens whose countries built them.

By tracing the links between commercial AI and military infrastructure, Ciston exposes a hidden feedback loop—where tools of convenience become tools of control. At a time of growing geopolitical tensions, AI arms races, and rearmament efforts across Europe and globally, this work underscores the need for democratic oversight, ethical governance, and civic awareness in shaping our technological future.

Still in the early stages of development, AI War Cloud Database is a research-driven artistic project with exceptional promise. The jury recognizes its contribution to a rapidly evolving debate on the societal and political stakes of AI. It encourages further collaboration, deeper investigation, and broader public engagement moving forward. In the spirit of the STARTS Prize, this work exemplifies the power of art and research to illuminate complex systems—and to invite us all to take part in shaping them.